
Control the Entire Data Science Process With DataRobot

by Dominick Amalraj

on October 2, 2020

As a leader in automated machine learning, DataRobot is well known for its ability to produce powerful and complex machine learning models that solve some of the most challenging issues businesses face. These models support better decision making, mitigate risk, and allow organizations to plan for the future with more insight into what is likely to occur.

The updates DataRobot has implemented this year have elevated it from a great model-building application to a platform that encompasses the entire data science process. Users can now evaluate which use cases would be most impactful to the organization, prepare data for model building through a code-free visual ETL tool, and better track the performance of productionized models.

1. Use Cases

A common obstacle to becoming machine learning-driven is the inability to select useful and attainable use cases. Any team or individual developing machine learning models needs to understand both the ROI of a use case and the feasibility of achieving it.

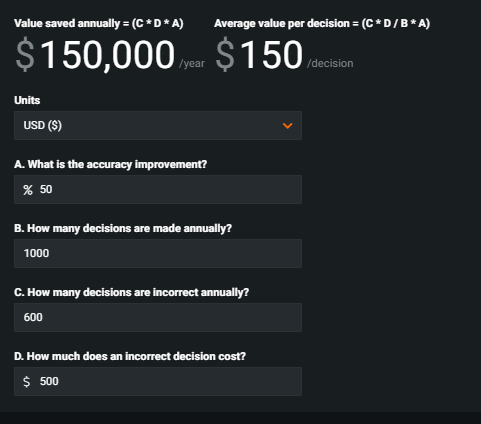

DataRobot users can now add use cases within the AutoML platform to understand the value and progress of their various projects. Users can provide general information such as a project description and potential target dates. As depicted in the image below, users can also input how many decisions are made regarding the use case, the ideal accuracy improvement for a potential model, the current number of incorrect predictions, and the potential cost of each incorrect decision.

With this information, DataRobot can calculate the potential annual ROI and the business impact an accurate model can have on your organization. On top of that, users can record the feasibility of a use case. Too many organizations spend time on projects with little value or a low chance of success. By capturing business impact and feasibility within the DataRobot platform, organizations can better understand the projects in their pipeline and determine the best plan of action.
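To make the arithmetic concrete, here is a minimal Python sketch of how those inputs could combine into an annual value estimate. It is not DataRobot's actual formula: the field names, the assumption that an accuracy improvement converts that share of all decisions from incorrect to correct (capped at today's error count), and the `annual_cost` input used for ROI are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class UseCaseInputs:
    """Hypothetical inputs mirroring the use case fields described above."""
    decisions_per_year: int          # how many decisions the use case drives annually
    current_incorrect_per_year: int  # how many of those decisions are currently wrong
    accuracy_improvement: float      # expected gain as a fraction of all decisions (0.05 = 5 points)
    cost_per_incorrect: float        # cost of a single incorrect decision, in currency units


def estimated_annual_value(u: UseCaseInputs) -> float:
    """Estimate yearly savings from the errors a better model would avoid.

    Assumption: the accuracy improvement turns that share of all decisions
    from incorrect to correct, capped at the number of errors that exist today.
    """
    errors_avoided = min(u.decisions_per_year * u.accuracy_improvement,
                         u.current_incorrect_per_year)
    return errors_avoided * u.cost_per_incorrect


def estimated_roi(u: UseCaseInputs, annual_cost: float) -> float:
    """Return ROI as (value - cost) / cost; annual_cost is a hypothetical input."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (estimated_annual_value(u) - annual_cost) / annual_cost


if __name__ == "__main__":
    u = UseCaseInputs(decisions_per_year=100_000, current_incorrect_per_year=20_000,
                      accuracy_improvement=0.05, cost_per_incorrect=40.0)
    print(estimated_annual_value(u))    # 200000.0
    print(estimated_roi(u, 50_000.0))   # 3.0
```

The cap keeps the estimate honest: a model cannot save money on more mistakes than the current process actually makes.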

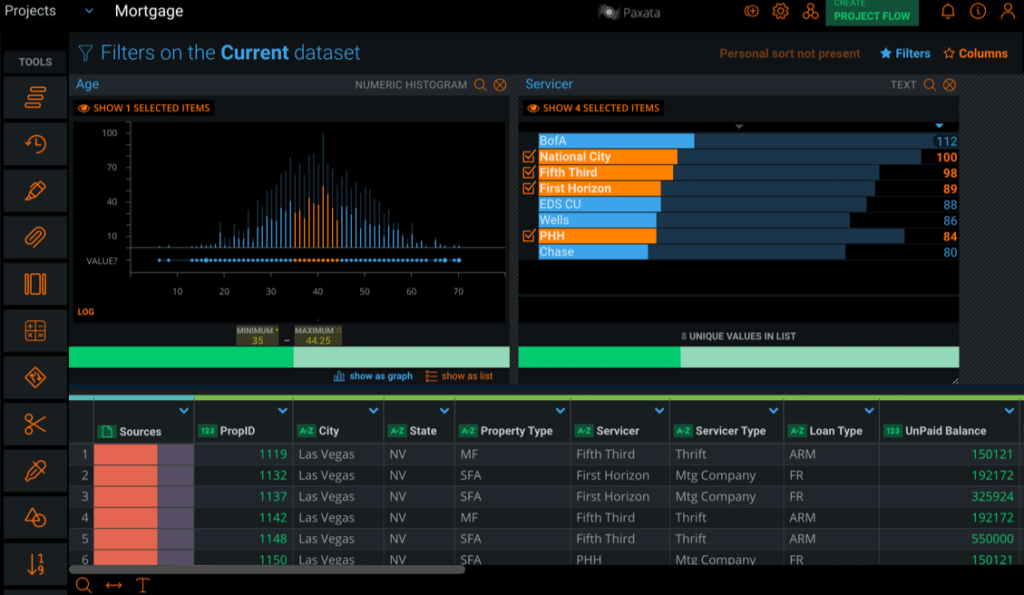

2. Paxata

DataRobot recently acquired Paxata, a data wrangling tool that prepares data with little to no code through a visual ETL interface. With the ability to connect to all of your data sources, Paxata allows both technical and non-technical users to develop complex data models that are ready for model building. Users select the steps to apply during data preparation, so even the trickiest joins, transformations, and formatting changes can be done in a few clicks. The visual ETL tool clearly records every step in the process, and users can go back to previous steps if changes are needed.

Additionally, Paxata connects with DataRobot's AI Catalog to send finalized datasets that are ready for model building, making it simple to start DataRobot projects. Paxata can also retrieve and store predictions from models deployed in DataRobot, and these stored predictions can be accessed through REST APIs or exported to a database of your choice. Since data preparation is a vital part of any machine learning project, DataRobot has added this component to accelerate the process and give users the ability to create optimal datasets through a user-friendly interface.
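The idea of a recorded sequence of preparation steps that you can step back through can be sketched in a few lines of Python. This only illustrates the concept, assuming data held as a list of dictionaries; `run_recipe` and the example steps are hypothetical and do not reflect Paxata's interface.

```python
from typing import Callable, List, Optional, Tuple

Row = dict
Step = Tuple[str, Callable[[List[Row]], List[Row]]]


def run_recipe(rows: List[Row], steps: List[Step], upto: Optional[int] = None) -> List[Row]:
    """Apply named preparation steps in order, optionally stopping after `upto` steps.

    The source rows are copied and never modified, so re-running with a smaller
    `upto` effectively "goes back" to an earlier step in the recipe.
    """
    result = [dict(r) for r in rows]  # shallow copies protect the source data
    for _name, step in steps[:upto]:  # steps[:None] means "all steps"
        result = step(result)
    return result


# Hypothetical example data and steps: a formatting fix, a lookup join, a filter.
customers = [{"id": 1, "name": " Ana "}, {"id": 2, "name": "Bo"}]
regions = {1: "East", 2: "West"}

steps: List[Step] = [
    ("trim names", lambda rs: [{**r, "name": r["name"].strip()} for r in rs]),
    ("join region", lambda rs: [{**r, "region": regions.get(r["id"])} for r in rs]),
    ("keep East", lambda rs: [r for r in rs if r["region"] == "East"]),
]

if __name__ == "__main__":
    print(run_recipe(customers, steps))          # [{'id': 1, 'name': 'Ana', 'region': 'East'}]
    print(run_recipe(customers, steps, upto=1))  # [{'id': 1, 'name': 'Ana'}, {'id': 2, 'name': 'Bo'}]
```

Keeping each step as a named, pure function is what makes the history replayable: changing an earlier step simply means re-running the recipe from the untouched source data.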

3. Accuracy and Model Deployments

Many data science teams spend too much time maintaining existing models, which includes evaluating their performance and retraining them to incorporate new data. To address this, DataRobot has introduced an accuracy component and the ability to replace the model behind a deployment. The accuracy component is available for all deployed models. All that is required is an association ID: a unique identifier, such as a series ID or key feature, for the entities the model makes predictions on. For example, this could be a patient ID for a model predicting heart disease or a SKU for a retail demand forecast. DataRobot already stores predictions over time; by matching them to actual outcomes through the association ID, it can now track accuracy over time as new data is ingested into the system. This gives data science teams a quick and easy way to understand how a model is performing.
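To show why the association ID matters, the following Python sketch joins stored predictions to actual outcomes that arrive later and computes accuracy per month. The data layout and the `accuracy_by_month` function are hypothetical; they illustrate the idea rather than DataRobot's implementation.

```python
from collections import defaultdict
from datetime import date


def accuracy_by_month(predictions, actuals):
    """Join stored predictions to later-arriving actuals by association ID.

    predictions: dict mapping association_id -> (prediction_date, predicted_label)
    actuals:     dict mapping association_id -> actual_label
    Returns {(year, month): accuracy}, scoring only predictions whose actuals have arrived.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for assoc_id, (pred_date, predicted) in predictions.items():
        if assoc_id not in actuals:
            continue  # outcome not known yet, so this prediction cannot be scored
        period = (pred_date.year, pred_date.month)
        total[period] += 1
        correct[period] += int(predicted == actuals[assoc_id])
    return {period: correct[period] / total[period] for period in sorted(total)}


if __name__ == "__main__":
    # Hypothetical heart disease predictions keyed by patient ID (1 = at risk).
    predictions = {
        "patient-001": (date(2020, 8, 3), 1),
        "patient-002": (date(2020, 8, 17), 0),
        "patient-003": (date(2020, 9, 2), 1),
        "patient-004": (date(2020, 9, 21), 0),
    }
    actuals = {"patient-001": 1, "patient-002": 1, "patient-003": 1}
    print(accuracy_by_month(predictions, actuals))  # {(2020, 8): 0.5, (2020, 9): 1.0}
```

Predictions without a matching actual are skipped rather than counted as wrong, so accuracy for recent periods fills in as outcomes are ingested.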

Along with the accuracy component, users can now take a deployment that is currently in production and replace its model with one that is more up to date or has been retrained. This lets users move to more efficient and precise models without halting any production outputs. DataRobot can also keep two models in a deployment and put into production the one that has performed better historically. Together, these features improve how teams track, evaluate, and productionize their models.
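This kind of side-by-side comparison, often called champion/challenger testing, can be sketched as a comparison of recent accuracy histories. The `choose_model` function and its `window` and `min_gain` parameters are illustrative assumptions, not DataRobot settings.

```python
from statistics import mean


def choose_model(champion_history, challenger_history, window=3, min_gain=0.0):
    """Return "challenger" if it beat the champion over the last `window` periods
    by more than `min_gain` in mean accuracy; otherwise return "champion".

    Histories are lists of per-period accuracy scores, oldest first.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    recent_champion = champion_history[-window:]
    recent_challenger = challenger_history[-window:]
    if not recent_champion or not recent_challenger:
        return "champion"  # not enough history to justify a swap
    gain = mean(recent_challenger) - mean(recent_champion)
    return "challenger" if gain > min_gain else "champion"


if __name__ == "__main__":
    champion = [0.81, 0.79, 0.77, 0.76]    # accuracy drifting down
    challenger = [0.78, 0.80, 0.82, 0.83]  # retrained model improving
    print(choose_model(champion, challenger, window=3, min_gain=0.02))  # challenger
    print(choose_model(champion, challenger, window=3, min_gain=0.05))  # champion
```

Requiring a minimum gain over a recent window, rather than reacting to a single period, avoids swapping models back and forth on noise.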

All essential tasks and phases in one platform!

Machine learning has many moving parts: identifying the right problem to go after, preparing a complex data model, monitoring the model's performance, and retraining models to get more accurate predictions. DataRobot has long been ahead of the game in building high-performing machine learning models, and with these updates users can control every aspect of the data science process and lead their organization toward becoming machine learning-driven.